Develop and Deploy Multi-Container Applications

Posted on Fri 06 November 2020 in Software • 12 min read

Following on from previous posts on this blog, this post goes through how to develop and deploy a multi-container application. Our application (albeit basic) lets users input a number corresponding to an index in the Fibonacci sequence, and responds with the computed number. Redis will store this number locally to give users a list of recently requested indexes, and PostgreSQL will store the input and output. More specifically, we will be using the following technologies:

| Technology | Use |
|---|---|
| Docker | Docker will be used to containerize the services inside our app |
| Amazon Web Services (AWS) Elastic Beanstalk | Elastic Beanstalk will manage the deployment of our application |
| Vue | Vue is the front-end JavaScript framework that we will use |
| Express | Express is responsible for the API between Redis, PostgreSQL and Vue |
| Redis | Redis will store/retrieve any local data used by our users |
| PostgreSQL | PostgreSQL will be our database |
| Nginx | Nginx will handle the routing between our services |
| GitHub Actions | GitHub Actions will be our CI/CD platform for running tests before deploying |

Let's start by diving into each of the services inside our application and how to set them up.

This post won't go into how to Dockerize each service in particular, more so how to connect them all together.

Find all the source code for this project at: https://github.com/JackMcKew/multi-docker

This post is part of a series on Docker/Kubernetes. Find the other posts at:

- Intro to Docker

- Develop and Develop with Docker

- Intro to Kubernetes

- Developing with Kubernetes

- Deploying with Kubernetes

- Deploy a Node Web App to AWS Elastic Beanstalk with Docker

Vue

Find all the source code for the front end client at: https://github.com/JackMcKew/multi-docker/tree/master/client

Vue is a JavaScript framework for creating user interfaces. We will be using it for the 'front-end' portion of the application. The Vue CLI can be installed through npm; once installed, we can run vue create project_name in the command line to create a template project for us. There are many options to enable when creating a project, and a good choice is to enable both unit testing and TypeScript. Once the project has been created, we can navigate into the directory and run npm run serve, which starts a development server so we can visit the app at localhost:8080.

To let users input the index of the Fibonacci sequence they wish to calculate, we need to set up a new page for them to land on. This will involve changing things in 3 places: components, views and router.

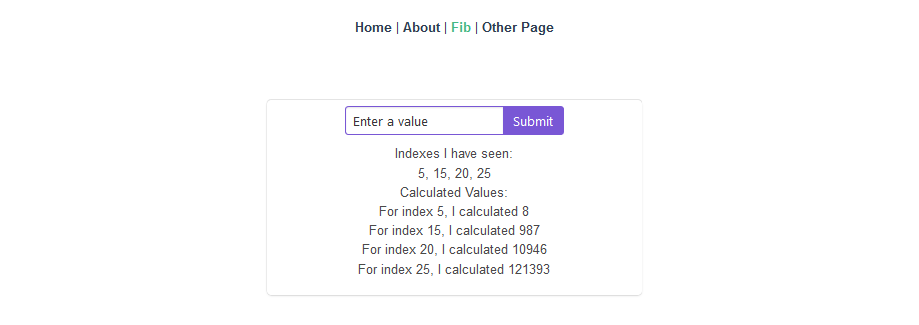

This is what we will be building with Vue:

FibInputForm Component

Components are pieces of user interface that we can reuse in multiple parts of our application. We build a component which contains both the HTML and JavaScript for driving the user input and for displaying the output retrieved from Redis or PostgreSQL. Vue components typically consist of a template block with corresponding script and style blocks. When writing the template for a component, there are numerous Vue-specific attributes that we can give the HTML elements. For this project we will make use of Bulma/Buefy CSS (which can be installed with npm install bulma or npm install buefy) for our styling.

We create a file named FibInputForm.vue inside project_name/src/components with the contents:


To briefly cover the functionality of the component, the template is built up of 3 parts: the input form where users submit an index to query, a comma-separated list of the latest indexes as stored in Redis, and finally a list of the calculated values as retrieved from the PostgreSQL database. We use axios to interface with the API that we will create with Express. We query the API upon load, and the page is always reloaded when submit is pressed. Once the component has been exported, it can be imported from any other point in our web application and placed with a <FibInputForm/> element! Neat!
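The display logic described above can be sketched as a few plain JavaScript helpers, independent of Vue and axios. This is a minimal sketch, not the component's actual code: the function names, the input validation and the shapes of the data returned by the API are all assumptions made for illustration.

```javascript
// Hypothetical helpers; names and data shapes are assumptions, not the project's API.

// Join the requested indexes into one comma-separated string for display.
function formatSeenIndexes(indexes) {
  return indexes.map(String).join(", ");
}

// Accept only non-negative whole numbers typed into the input form.
function parseIndex(rawInput) {
  const trimmed = String(rawInput).trim();
  if (!/^\d+$/.test(trimmed)) {
    return null;
  }
  return Number(trimmed);
}

// Turn { index: value } pairs into one display line per calculation.
function formatCalculatedValues(valuesByIndex) {
  return Object.entries(valuesByIndex).map(
    ([index, value]) => `For index ${index} the result is ${value}`
  );
}
```

Keeping this logic in small functions like these would also make it easy to cover with the unit tests enabled when the project was created.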

FibInputPage View

Now that we have our component, we need a page to put it on! We create a new file within project_name/src/views named FibInputPage.vue with the contents:


As we can see above, we've imported our neat little FibInputForm and used it after placing it in a centered section. Again we export the page view so we can import into the router to make sure it's linked to a URL.

Routing the Page

Lastly for Vue, we need to set up a route so users can reach our page, both within the vue-router and on the main page (App.vue). Routes are all defined within project_name/router/index.ts. So we need to add in a new one for our FibInputPage by adding the following object into the routes array:

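A route entry of this kind might look like the following sketch. The path /fib and the route name are assumptions chosen for illustration, and the lazy-loaded component is one option that vue-router supports rather than necessarily what the project does.

```javascript
// Hypothetical route entry; the path and name are assumptions for illustration.
const fibRoute = {
  path: "/fib",
  name: "FibInputPage",
  // Lazy-load the view so it is only fetched when the route is first visited.
  component: () => import("../views/FibInputPage.vue"),
};

// The entry sits alongside any existing routes in the array passed to vue-router.
const routes = [fibRoute];
```

Whatever path is chosen here is the same path a router-link in App.vue would need to point at.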

Next to ensure the route is accessible from a link on the page, add a router-link element into the template of App.vue:


Redis

Find all the source code for the redis service at: https://github.com/JackMcKew/multi-docker/tree/master/worker

Redis is an open source, in-memory data store. We give it a key and a value, which it stores; later we can ask for the key and get the value back. We are going to set up two parts to make this service work as expected. The Redis runtime is managed for us by using the redis image provided on Docker Hub, but we need to make a Node.js project to interface with it.

We do this by creating 3 files: package.json, index.js and keys.js. package.json defines what dependencies need to be installed, and how to run the project. index.js manages the redis client and contains the functionality for calculating the fibonacci sequence when given an index. keys.js contains any environment variables that the project may need. In particular we use environment variables so docker-compose can link all the services together later on.
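To make the role of keys.js concrete, here is a minimal sketch of reading connection settings from environment variables. The variable names REDIS_HOST and REDIS_PORT and the function name loadKeys are assumptions, not necessarily what the project uses.

```javascript
// Hypothetical keys helper; REDIS_HOST and REDIS_PORT are assumed variable names.
function loadKeys(env = process.env) {
  return {
    redisHost: env.REDIS_HOST,
    // Environment variables are always strings, so convert the port to a number.
    redisPort: env.REDIS_PORT ? Number(env.REDIS_PORT) : undefined,
  };
}
```

In a real keys.js the resulting object would typically be exported so index.js can require it.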

Here is the code for the core of this project, index.js:


In index.js we initialise a redis client using the environment variables set in keys.js. We then create a duplicate of the client for subscribing, because a Redis connection that is listening for messages cannot be used for regular commands at the same time. We define our ever so special Fibonacci function (this is slow on purpose), and finally we set up a handler that, when given a message, calculates the value and stores it in Redis for us.
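The worker logic described above can be sketched without a running Redis instance. This is a minimal sketch under stated assumptions: a Map stands in for the Redis hash, the handler name onMessage is hypothetical, and the base case (indexes 0 and 1 both return 1) is an assumed convention.

```javascript
// Deliberately slow recursive Fibonacci. The base case (indexes 0 and 1 both
// give 1) is an assumption about the convention used.
function fib(index) {
  if (index < 2) return 1;
  return fib(index - 1) + fib(index - 2);
}

// Stand-in for the Redis hash; a real worker would write through the Redis client.
const values = new Map();

// Called for each message (a requested index) received from the subscription.
function onMessage(message) {
  const index = parseInt(message, 10);
  values.set(message, fib(index));
}

onMessage("10");
console.log(values.get("10")); // 89
```

Because the recursion recomputes the same sub-results repeatedly, the running time grows exponentially with the index, which is what makes a separate worker worthwhile in this toy application.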

PostgreSQL

Find all the source code for the PostgreSQL service at: https://github.com/JackMcKew/multi-docker/tree/master/server

We are going to use the PostgreSQL service part of our project to contain the interface with the database, and the API with express. Very similar to our redis project, we need a package.json, index.js and keys.js. Let's dive straight into the code inside index.js:


We do a series of things here:

- Initialise our express router which will be the API

- Create a client for the PostgreSQL database (ensuring to create a table if it doesn't exist)

- Create another client for our redis service, which will publish any keys requested as to show to the user later on

- Set up our API end points, to either get all the indexes ever requested or the latest

- Set up the API post method, which will handle sending the request to redis and storing in the database

- Listen on the port for any incoming requests!
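The request handling in the steps above can be sketched as plain functions, with arrays standing in for PostgreSQL and Redis. Everything here is hypothetical: the function names, the status codes, the response shapes and the upper limit on the index are assumptions for illustration, not the project's actual API.

```javascript
// Hypothetical in-memory stand-ins for PostgreSQL and Redis, for illustration only.
const savedIndexes = []; // plays the role of the database table
const published = [];    // plays the role of messages published to Redis

// Logic a POST handler might run: validate, publish to the worker, store the index.
function submitIndex(rawIndex, maxIndex = 40) {
  const index = Number.parseInt(rawIndex, 10);
  if (Number.isNaN(index) || index < 0) {
    return { status: 422, body: "Index must be a non-negative integer" };
  }
  // The cap is an assumption: the recursive calculation gets very slow for large indexes.
  if (index > maxIndex) {
    return { status: 422, body: "Index too high" };
  }
  published.push(String(index));
  savedIndexes.push(index);
  return { status: 200, body: { working: true } };
}

// Logic a GET handler might run to list every index ever requested.
function allIndexes() {
  return [...savedIndexes];
}
```

In the real service these functions would be wired to Express routes, with the arrays replaced by the PostgreSQL and Redis clients.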

Nginx

Nginx in this project creates all the connections between the services so that they play nicely together. Nginx works by defining the connections in a configuration file, which is passed to nginx at runtime.

This is our default.conf nginx configuration for this project:


We are doing a series of things once again:

- Our Vue front end listens on port 8080 by default, so that's where the client connection lives

- Our API back-end (the Express server in the PostgreSQL service) is listening on port 5000

- Our nginx runtime will listen on port 80 and route as required

We also set up a few locations, which help nginx 'finish' the route. / means that any incoming connection is passed to the front end to render. If a request comes in with a path starting with /api, we want to pass it to the API service, so we rewrite the URL to strip the /api prefix before forwarding it.
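The routing decision can be mimicked in a few lines of JavaScript to show what the rewrite does to a path. This is only a sketch of the behaviour, not nginx itself: the ports come from the list above, while the upstream names client and api and the function name route are assumptions.

```javascript
// Ports come from the text; the upstream names "client" and "api" are assumptions.
const upstreams = { client: 8080, api: 5000 };

// Mimic the two locations: /api is rewritten and sent to the API,
// everything else goes to the front end unchanged.
function route(requestPath) {
  if (requestPath.startsWith("/api/")) {
    // Similar in spirit to an nginx rule such as: rewrite /api/(.*) /$1 break;
    const rewritten = requestPath.replace(/^\/api\/(.*)$/, "/$1");
    return { upstream: "api", port: upstreams.api, path: rewritten };
  }
  return { upstream: "client", port: upstreams.client, path: requestPath };
}

console.log(route("/api/values/all").path); // "/values/all"
console.log(route("/about").upstream);      // "client"
```

Stripping the prefix means the API itself never needs to know it is mounted under /api.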

Docker Compose

Now that we've got our individual services all configured, we need a way to run them all at the same time. We need to create a docker-compose.yml which contains all the environment variables and describes how the services depend on and connect to each other, so we can run it all!


Here we are setting up all of the docker containers we wish to run in parallel that will make up our entire project:

- PostgreSQL Container
    - We use the postgres image on Docker Hub and must pass in the default password to get into it

- Redis Container
    - We use the redis image on Docker Hub (that easy!)

- API Container
    - This contains the code behind the API which interfaces with redis & postgres

- Client Container
    - Our Vue frontend that the users will see

- Worker Container
    - This contains the code to calculate the fibonacci sequence by interfacing with the redis runtime

- Nginx Container
    - Our nginx container that'll handle all the routing in between each of the services, this is what is exposed on port 8000 on the local PC when we run all these containers

Note that the host environment variables (which were read in keys.js earlier) are just the names of the services given in the docker-compose.yml file. Docker Compose makes each service reachable by its service name on the shared network, so the containers can find each other without any extra configuration. How awesome is that!

Github Actions

Now that we've set up all of our services and made sure they play nicely in Docker Compose, it's time to implement CI/CD with GitHub Actions, so whenever we push new versions of our code it'll automatically test that everything works and deploy the new version of the application. We do this by creating a test-and-deploy.yml within .github/workflows/ which contains:


We are doing the following steps:

- Get the latest copy of all the source code

- Build & Test our application to make sure it works

- Build & Publish each container to Docker Hub so any other deployment service can pull directly from there

- Deploy our application to Elastic Beanstalk

Docker Hub

Everything's now set up! For another user or a deployment service to get the images for the services we've created, they can now simply run docker run jackmckew/multi-docker-client (and likewise for the other images) and that's it! It should run on any operating system provided Docker is installed, how cool is that!

Deploying to AWS Elastic Beanstalk

Now we want to deploy this application to Elastic Beanstalk, which means we need to create a Dockerrun.aws.json that is very similar to the docker-compose.yml. The contents of the JSON file will be:

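As a rough illustration of the shape of such a file (not the project's actual Dockerrun.aws.json), the sketch below builds a multi-container definition in JavaScript and prints it as JSON. Only jackmckew/multi-docker-client is named in this post; the other image names, the memory value, the links and the helper name container are assumptions. Redis and PostgreSQL are left out on the assumption that they run as managed AWS services rather than containers.

```javascript
// Build one container definition in the multi-container Dockerrun (version 2) shape.
function container(name, image, extra = {}) {
  return { name, image, hostname: name, essential: false, memory: 128, ...extra };
}

// Only the client image name comes from this post; the other image names,
// the memory value and the links are assumptions for illustration.
const dockerrun = {
  AWSEBDockerrunVersion: 2,
  containerDefinitions: [
    container("client", "jackmckew/multi-docker-client"),
    container("server", "jackmckew/multi-docker-server"),
    container("worker", "jackmckew/multi-docker-worker"),
    container("nginx", "jackmckew/multi-docker-nginx", {
      // nginx is the entry point, so the environment depends on it staying up.
      essential: true,
      portMappings: [{ hostPort: 80, containerPort: 80 }],
      links: ["client", "server"],
    }),
  ],
};

console.log(JSON.stringify(dockerrun, null, 2));
```

The links entries play a similar role to the service names in docker-compose.yml: they let the nginx container reach the client and server containers by name.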

Now provided we've set up the following:

- RDS - PostgreSQL

- ElastiCache - Redis

- VPC - Security Group

- Initialized all the environment variables in the EB instance

We should be able to push to GitHub and see our application deployed!